Radiation is fascinating. I have had friends and relatives die of cancer, and until fairly recently radiation therapy was not an option. Now, radiation therapy is close to being my preferred treatment should I happen to get cancer. Malignant cells are pretty easy to kill; the trick is not killing the patient. Surgical removal is still the treatment doctors favor most, but I have seen too many friends have large sections of tissue cut out only to find that the cancer is still active. Chemotherapy has some effectiveness, but a lot of side effects. Done properly, radiation therapy does the job quite well without a lot of side effects.

While looking into radiation, both as a therapy and as an energy source, I have picked up on a few interesting things. Since the world is freaked out over Fukushima fallout, I thought I would start putting some facts, as I understand them, down in a post. This post may grow as I learn more, or as scientists learn more, about this fascinating subject. I recommend that all new Geiger counter owners read this and much more before worrying themselves to death over radiation. Stress related to fear of radiation is just as big a health problem as the radiation itself.

Three main radioactive isotopes released at Fukushima cause concern: Iodine 131, Cesium 137 and Strontium 90.

Iodine 131 has a half life of about 8 days. The half life, the time it takes for half of the material to decay, is short, which is both good and bad. It is good because after 5 half lives, about 40 days, it is pretty much gone (only about 3 percent remains). It is bad because it is very active during its biological half life, the time it takes the body to eliminate half of it.
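To make the "5 half lives" rule concrete, here is a minimal Python sketch of pure physical decay. It assumes a half life of 8.02 days for Iodine 131 and ignores biological elimination, which would only make the remaining amount smaller.

```python
def fraction_remaining(elapsed_days: float, half_life_days: float) -> float:
    """Fraction of the original activity left after pure radioactive decay."""
    return 0.5 ** (elapsed_days / half_life_days)


I131_HALF_LIFE_DAYS = 8.02  # assumed physical half life of Iodine 131

for n in range(1, 6):
    days = n * I131_HALF_LIFE_DAYS
    left = fraction_remaining(days, I131_HALF_LIFE_DAYS)
    print(f"{n} half lives ({days:.0f} days): {left:.1%} remaining")
```

Each half life cuts the remainder in half, so after five of them roughly 1/32 of the original activity is left.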

Each decay is a radioactive pop. In Iodine 131's case the pop is a beta particle (an electron) plus some gamma radiation. Each pop releases a certain amount of energy, and that energy is what damages the cells of the body. The beta particle damages cells close to the pop, while the gamma rays can travel farther. The decay of I131 happens in two steps: beta decay to an excited state of Xenon 131, which then emits gamma radiation. The total energy of an Iodine 131 pop is about 971 keV, or 971 thousand electron volts.

The biological half life of I131 is a little tricky. After ingestion, much of the I131 is absorbed by the thyroid gland. If it is absorbed by the thyroid, its biological half life is longer than its radioactive half life. If it is not absorbed by the thyroid, its biological half life is shorter. For this reason, a single biological half life is not commonly published.

I131 also affects health oddly: in most cases, smaller amounts do more damage than larger ones. For these reasons (the relatively short half life, the affinity for the thyroid and the smaller hazardous dose), Iodine 131 is generally the most dangerous component of radioactive fallout. Large doses of stable iodine reduce the amount of radioactive iodine the thyroid can absorb. Because of its short radioactive half life, Iodine 131 is not a long-term problem.

Cesium 137 (or Caesium 137) has a half life of about 30 years. Unlike iodine, cesium is chemically similar to potassium and rubidium. Potassium is a common electrolyte used mainly in the muscles of the body, though some may be absorbed in the bones. Cesium 137 also has a two-step decay: Cs137 decays to Barium 137 by releasing a beta particle, and the excited barium 137 then releases gamma radiation. The total energy released is about 1176 keV, or 1176 thousand electron volts. This is a little more energy than Iodine 131 releases, but not much. Cesium 137 has a biological half life of about 70 days. Prussian blue is an antidote for Cesium 137. Since the body treats cesium 137 like potassium, maintaining proper electrolyte levels reduces the amount of cesium 137 absorbed.

Strontium 90 has a half life of about 29 years. Strontium 90 is a bone seeker: the body treats it like calcium, and it can cause bone cancer and leukemia. It decays in two steps, first to Yttrium 90, which has a half life of 64 hours, and then to Zirconium 90, with a total beta decay energy of nearly 2800 keV, or 2800 thousand electron volts. That is more than twice the decay energy of Iodine 131 or Cesium 137, and the second decay, to Zirconium 90, carries most of it at about 2280 keV. As a bone seeker, Sr 90's biological half life is very long, effectively indefinite, if it is absorbed by the bones or teeth. However, 70 to 80 percent of ingested Sr 90 passes quickly through the body without being absorbed. Because of its high energy and its absorption in bones and teeth, Sr 90 has a greater probability of causing cancer.
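The energy comparison between the three isotopes is simple arithmetic. The sketch below uses rounded total decay energies (Q values) that I am assuming from standard nuclear data tables, and counts Sr 90 together with its short-lived Y 90 daughter. Keep in mind these are totals per decay: in beta decay a share of the energy leaves with an antineutrino, so the energy actually deposited in tissue is lower.

```python
# Rounded total decay energies (Q values) in keV; assumed from standard nuclear data tables.
Q_KEV = {
    "I-131 -> Xe-131": 971,
    "Cs-137 -> Ba-137": 1176,
    "Sr-90 -> Y-90": 546,
    "Y-90 -> Zr-90": 2280,
}

# Sr-90 is counted together with its short-lived Y-90 daughter (64 hour half life).
sr90_chain_kev = Q_KEV["Sr-90 -> Y-90"] + Q_KEV["Y-90 -> Zr-90"]

for name in ("I-131 -> Xe-131", "Cs-137 -> Ba-137"):
    ratio = sr90_chain_kev / Q_KEV[name]
    print(f"Sr-90 chain ({sr90_chain_kev} keV) vs {name}: {ratio:.1f}x")
```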

Research by the Radiation and Public Health Project has indicated that Strontium 90 released during nuclear tests and near nuclear reactors caused elevated concentrations in the public and increased cancer rates. The reports have been criticized by the Nuclear Regulatory Commission, and the results available online appear to be inconclusive and poorly compiled. Per the study, approximately 1.6% of the respondents had some form of cancer, and the majority of the cases were from 1962 to 1964, decreasing dramatically after 1964. The Wikipedia editor who referenced the report linked to a New York Times article rather than the actual report. Comparing the reported results to the Centers for Disease Control National Vital Statistics summary, there does not appear to be any verification of the reported results.

While Strontium 90 is clearly likely to cause cancer in sufficient dosage, there is no credible evidence that doses under the NRC's regulatory limits pose any significant increase in cancer risk.

For example, if you ingest 100 becquerel of Sr 90, the body may retain about 30 becquerel, or 30 pops per second. The average energy per pop would be about 1400 keV, roughly 40% more than the roughly 1000 keV of an Iodine 131 or Cesium 137 pop, so the damage would be roughly equal to 42 average pops, or counts, per second. For an average adult, this is roughly 1/100 of the normal background radiation produced by your own body (about 4,400 pops per second) and a much smaller fraction of the total normal background radiation. Since Strontium 90 is a small percentage of the total radiation released at Fukushima, the 100 becquerel example is probably quite high. I will review the reports, but Sr 90 was less than 1 percent of the radioactive isotopes released, with 3.2 to 32 becquerel per kilogram found in only a few soil samples.
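The Sr 90 example can be reproduced in a few lines. This is a sketch of the rough energy weighting used in the text, not a dosimetry model: the 30 percent retention, the 1.4 energy weight and the 4,400 pops per second body baseline are all taken from this post as assumptions.

```python
ingested_bq = 100.0        # hypothetical Sr-90 intake from the example
retained_fraction = 0.30   # 70 to 80 percent passes through, so at most ~30 percent is kept
energy_weight = 1.4        # ~1400 keV per pop vs. a ~1000 keV reference pop
body_baseline_bq = 4400.0  # natural pops per second in a 75 kg adult

retained_bq = ingested_bq * retained_fraction     # pops per second that stay in the body
weighted_pops = retained_bq * energy_weight       # energy-weighted equivalent pops per second
share_of_baseline = weighted_pops / body_baseline_bq

print(f"retained: {retained_bq:.0f} pops/s, weighted: {weighted_pops:.0f} pops/s")
print(f"share of body baseline: {share_of_baseline:.2%} (about 1/{1 / share_of_baseline:.0f})")
```

Run as written, the weighted figure comes out just under 1 percent of the body's own natural activity.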

I will continue researching to find a more compelling peer-reviewed report, if one exists.

With nuclear radiation there is a lot of contradictory information available. As with the Strontium study, statistics can be very misleading. Cancers that may be linked to man-made radiation also occur naturally; they existed before man-made atomic fission began, and their rates have increased not because of radiation but because of improvements in health care, changes in the environment and exposure to other, non-radioactive carcinogens. One major factor missing from the more controversial studies is that as life expectancy increases, the causes of death change. In the fifth and sixth decades of life, cancer becomes a more prominent cause of death, more likely because of hereditary and environmental factors than man-made radiation exposure.

These studies are also complicated by the paradoxical "vaccine" effect of some low levels of certain types of radiation exposure. Lower levels of Iodine 131 tend to increase thyroid cancers, while very low levels of tritium (the radioactive isotope of hydrogen) tend to reduce thyroid cancers. To simplify estimating the risk of adverse health impact from exposure to man-made radiation, the increase in radioactive decay energy may be a useful tool.

As a general rule of thumb, absorbing decay energy more rapidly is more detrimental to health. This is far from a perfect rule, since different parts of the body respond differently to radiation.

Counts, or pops, per second are the simplest measure of decay activity. Isotopes with shorter half lives pop more quickly. Apart from a few that nature keeps replenishing, such as carbon 14 and the decay products of uranium and thorium, isotopes with half lives shorter than hundreds of thousands of years are generally man made. On average, the human body contains radioactive isotopes that produce 4,400 pops per second per 75 kilograms of mass, 75 kilograms being considered the mass of the average human. Since few people are truly average, roughly 60 pops per second per kilogram, or 30 pops per second per pound, are good baseline numbers for radioactive health purposes. Depending on environment and lifestyle, individuals can vary significantly from this baseline. Since hundreds of thousands of people have bought or plan to buy Geiger counters: don't freak out if your reading is higher than your body mass baseline, because the normal background radiation where you live can be much higher.
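The per-kilogram and per-pound baselines follow directly from the 4,400 pops per second figure. A minimal sketch, where `body_baseline` is a hypothetical helper for scaling to your own mass:

```python
BODY_POPS_PER_SECOND = 4400.0   # average natural activity of a 75 kg adult (from the text)
REFERENCE_MASS_KG = 75.0
KG_PER_POUND = 0.45359237       # exact definition of the avoirdupois pound

pops_per_kg = BODY_POPS_PER_SECOND / REFERENCE_MASS_KG   # about 58.7, rounded up to 60
pops_per_lb = pops_per_kg * KG_PER_POUND                 # about 26.6, rounded up to 30


def body_baseline(mass_kg: float) -> float:
    """Estimated natural pops per second for a body of the given mass (hypothetical helper)."""
    return mass_kg * pops_per_kg


print(f"{pops_per_kg:.1f} pops/s per kg, {pops_per_lb:.1f} pops/s per lb")
print(f"90 kg adult: about {body_baseline(90):.0f} pops/s")
```

The rounded 60 and 30 are slightly generous, which is fine for a rule of thumb.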

The becquerel is defined as the decay of one nucleus per second. Mainly because of radioactive potassium 40 producing the pops, the average kilogram of human mass has an activity of about 60 Bq/kg. Animal testing, primarily on dogs, has been used to determine the lethal dose for 50% of a population, or LD50. A test on beagles with cesium chloride did not publish the LD50 in its abstract (the paper is behind a paywall), but from the information available the LD50 is greater than 1900 microcuries per kilogram. 1900 microcuries per kilogram equals 70.3 million becquerel per kilogram, which is considerably larger than 60 becquerel per kilogram. As an estimate, 1/1000 of 70.3 MBq would be 70.3 kBq, or 70,300 becquerel per kilogram, which is possibly where a statistically significant adverse health impact could easily be determined.
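The curie-to-becquerel conversion is where numbers like this usually go wrong, so here is a short sketch of it. It relies only on the definition of the curie (3.7×10^10 Bq) plus the 1/1000 rule of thumb and 60 Bq/kg baseline from the text.

```python
BQ_PER_MICROCURIE = 3.7e4   # 1 curie is defined as 3.7e10 Bq, so 1 microcurie = 37,000 Bq
BODY_BASELINE_BQ_PER_KG = 60.0


def microcuries_to_bq(microcuries: float) -> float:
    return microcuries * BQ_PER_MICROCURIE


ld50_floor = microcuries_to_bq(1900)   # Bq/kg; the beagle LD50 is above this
concern_point = ld50_floor / 1000      # the text's rough 1/1000 estimate

print(f"LD50 floor: {ld50_floor / 1e6:.1f} MBq/kg")
print(f"1/1000 of that: {concern_point:,.0f} Bq/kg, "
      f"about {concern_point / BODY_BASELINE_BQ_PER_KG:,.0f}x the body baseline")
```

Even the 1/1000 estimate sits more than a thousand times above the natural body baseline.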

Beagles are not people, and the test was not designed to determine increased incidence of cancer due to radiation. Still, 70,300 becquerel per kilogram suggests an upper bound on exposure: a level that may not cause an adverse health impact, but probably would cause a measurable one. It is definitely a point at which to be worried.

Studies of Chernobyl offer more information on the direct human impacts of radioactive cesium. Studies by the former Soviet government are rightly viewed as questionable. Studies by international agencies are much more likely to be trustworthy, though they may tend to be overly critical in some cases due to legal and political issues. The studies most likely to be overly critical are those using linear no-threshold models, which easily confuse normal mortality rates with possible radiation impacts. On the other hand, political influence may have caused lower impact estimates by international agencies with interests in nuclear power. It is a tangled mess, but there are still some reasonable conclusions that can be drawn, if you wish to be unbiased.

First, studies of the individuals actively involved in containing the incident provide a reasonable maximum impact. While criticized by anti-nuclear advocates, the WHO reports estimate approximately 9,000 excess cancer deaths: up to about 4,000 among the roughly 600,000 people with the highest exposures, and the rest among the much larger population of areas contaminated above roughly 40,000 becquerel per square meter. This estimate includes iodine 131 deaths, which were extremely high due to poor emergency procedures employed by the former Soviet Union and, somewhat surprisingly, France. It also includes emergency workers exposed to much higher direct radiation levels. Notably, direct exposure caused fewer deaths than ingestion of radioactive isotopes.

Second, countries near the Chernobyl site conducted their own studies and established maximum safe radiation levels for food products. The EU, for example, has a 600 becquerel per kilogram limit on food products. The UK placed a limit of 1000 becquerel per kilogram on sheep meat. Wild game, boar in particular, was found to have levels up to 40,000 becquerel per kilogram, with an average of 6800 becquerel per kilogram. The EU and UK limits were set at levels with no statistically significant cancer risk. There is a gray area between the roughly 1000 becquerel per kilogram considered safe in human food and the approximately 40,000 to 70,300 becquerel per kilogram seen in animals.

While animals are not people, the food limits that apply to meat indicate that human radiation levels can be considerably higher than the average 60 pops per second per kilogram and still be safe.

Where to draw the line of concern is a personal decision. Twice average should not be a level of concern, but ten times average may be. The type of isotope also needs to be considered: Iodine 131 because it is thyroid specific, and Strontium 90 because it is bone specific and higher energy. There is no indication, though, that limits set by nations for foodstuffs and water supplies pose any significant health risk.

If you happen to be the proud new owner of a Geiger counter, you may wish to establish your own body mass baseline. If you properly allow for background radiation, 60 pops per second per kg is perfectly normal, 120 pops per second per kg is very likely to be safe, 360 pops per second per kg may be your concern threshold, and 600 to 1000 pops per second per kg is a definite level of concern. Remember that background levels are not only higher, they can also vary. Your fancy new Geiger counter may not have the sensitivity to do anything but scare you or give you a false sense of security. If you want it to provide some peace of mind, read up on its limitations to avoid freaking yourself and others out with erroneous readings and interpretations. If you are confident in your ability to use it properly, think about publishing your results, including methods, online. Note: I originally dropped this sentence: trying to measure your body mass radiation level with a Geiger counter will not give you a number to be confident in, but a properly calibrated counter can indicate a change in radiation level, as when testing food.
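The tiers above can be written as a simple lookup. This is a hypothetical helper, not a calibrated tool: it assumes you already have a background-corrected estimate in pops per second per kilogram, which, as the note says, a Geiger counter alone will not reliably give you. The label for the 120 to 360 band is my own interpolation, since the text does not name it.

```python
def concern_level(net_pops_per_second_per_kg: float) -> str:
    """Map a background-corrected reading onto the rough tiers described above (hypothetical labels)."""
    if net_pops_per_second_per_kg <= 60:
        return "normal"
    if net_pops_per_second_per_kg <= 120:
        return "very likely safe"
    if net_pops_per_second_per_kg < 360:
        return "elevated, keep an eye on it"
    if net_pops_per_second_per_kg < 600:
        return "possible concern threshold"
    return "definite concern"


for reading in (55, 110, 250, 400, 800):
    print(reading, "->", concern_level(reading))
```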

After Thoughts:

"The report said cancer risk from exposure to between 100 and 200 millisieverts is 1.08 times higher when compared with people who weren't exposed, while the cancer risk of people whose body mass index was between 30 to 39.9 was 1.22 times higher than a group of people whose body mass index was between 23 and 24.9. The cancer risk was 1.6 times higher for a group of people who smoke, when compared with nonsmokers, it said.

"The risk of cancer incidence is not zero even at low doses. . . . But the levels we are now exposed to are not something people have to worry deeply about," said Ikuro Anzai, a professor emeritus at Ritsumeikan University who has criticized the safety of nuclear power plants for decades.

"Many people get scared simply by hearing the word radioactivity. But we have to base our worries on reality. It is very difficult, but we need to have rational fears," said Anzai, an expert on radiation protection." I found this published in the Japan Times after I posted this. It is excellent and by someone with anti-nuclear leanings.

Still, the units used to describe radiation levels are confusing. That is the main reason I put this together using becquerel per kilogram, or counts per second, which is the kind of reading most Geiger counter buyers will have. As I have mentioned in other posts, there is no direct conversion from becquerels to millisieverts, though for ingested radiation a somewhat reasonable comparison is possible. A millisievert is a small unit and a becquerel is a very small unit, so don't confuse them; stress can be pretty bad for your health too.

Efficient, portable alternative-energy fuels are required to end our dependence on fossil fuels. Hydrogen holds the most promise in that regard. Exploring the paths open for meeting the goal of energy independence is the object of this blog. Hopefully you will find it interesting and informative.

Monday, May 30, 2011

Incorrectly Correcting the Banana Equivalent Dose

Wikipedia is a living encyclopedia that changes with global events. The Banana Equivalent Dose entry has been corrected since the Fukushima incident, but was it really corrected?

History

The banana equivalent dose was introduced as a way to clarify the risk of radiation exposure that results from human activity, such as the use of nuclear power or medical procedures, by comparing it with the risk associated with natural doses. The BED calculation probably originated on a nuclear safety mailing list in 1995, where a value of 9.82×10⁻⁸ sieverts or about 0.1 μSv was suggested.[1] However, that calculation has been criticized as misleading,[2] since excess potassium ingested (in the form of a banana) is quickly eliminated by the body.

In 2011, as the Fukushima nuclear disaster unfolded, the idea was popularised on xkcd[3] and slashdot.[4]

From this change it would seem that the BED is misleading, but the implication that the BED is misleading is more misleading than the original use of the BED.

The editor of the article implies that since the body constantly cycles potassium, the level of radioactive potassium 40 would remain constant while other sources of radiation would not. While different radioactive isotopes do affect the body differently, the revision trivializes the BED by not going into depth about where it is effective and ineffective, which suggests that the editor may be emotionally or politically motivated.

One of the primary radioactive isotopes of concern following Fukushima is Cesium 137 contaminating food. Cesium 137 is chemically similar to potassium and, like potassium, passes through the body. There are differences in the way Cs137 and K40 behave in the body. K40 has a half life of about 1.3 billion years versus about 30 years for Cs137. Since Cs137 has a much shorter half life, each atom is far more likely to "pop" in any given second, so a small quantity of Cs137 produces the same number of counts per second as a much larger quantity of K40. However, the radiation dose is driven by the "pops," so the health impact of Cs137 is virtually identical to that of potassium 40 at the same level of radiation. The editor's logic falls apart by assuming an atom-for-atom equivalence instead of a count-per-second equivalence. On a count-for-count basis, it is unlikely that proportionally more Cs137 than K40 will be absorbed; the excess of each is equally likely to pass through the body.
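The atom-for-atom versus count-for-count point comes from the activity relation A = λN, where λ = ln 2 / half life. The sketch below computes how many atoms of each isotope it takes to produce one pop per second; the half lives (about 1.25 billion years for K-40, about 30.17 years for Cs-137) are assumed values from standard tables.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def atoms_for_activity(activity_bq: float, half_life_years: float) -> float:
    """Atoms needed for a given activity, from A = lambda * N with lambda = ln 2 / half life."""
    decay_constant_per_s = math.log(2) / (half_life_years * SECONDS_PER_YEAR)
    return activity_bq / decay_constant_per_s


k40_atoms = atoms_for_activity(1.0, 1.25e9)   # assumed K-40 half life, about 1.25 billion years
cs137_atoms = atoms_for_activity(1.0, 30.17)  # assumed Cs-137 half life, about 30 years

print(f"K-40 atoms for 1 Bq:   {k40_atoms:.2e}")
print(f"Cs-137 atoms for 1 Bq: {cs137_atoms:.2e}")
print(f"ratio: {k40_atoms / cs137_atoms:.1e}")
```

The ratio is simply the ratio of the half lives, tens of millions to one, which is why comparing the two isotopes atom for atom is misleading while comparing them pop for pop is not.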

In the case of radioactive iodine, the BED dose needs to be qualified. Unlike potassium, iodine has a more limited role in the human body. Iodine is preferentially concentrated in the thyroid and low doses of radioactive iodine 131 are paradoxically more dangerous than high doses.

Just as stable iodine reduces the thyroid's absorption of Iodine 131 by filling iodine receptors, maintaining proper electrolyte levels reduces the body's absorption of Cesium 137.

With the exception of radioactive isotopes that have a greater tendency to accumulate in certain organs or glands, the impact per "pop" or count varies little in the human body. The Banana Equivalent Dose is an effective method of communicating radiation impact with limited qualifications.

It will be interesting to see if the Wikipedia Banana Equivalent Dose editor can revise his revision with a little more concrete wording and citations.

Sunday, May 29, 2011

The Fallout Over Moving the Radiation Goal Post - Japan's Radiation Issues

The Japan Probe website has a new post on the changing maximum radiation limits in Japan. "Several weeks ago, the Japanese government raised the acceptable maximum annual radiation dosage standard for children from 1 millisieverts to 20 millisieverts (about 3.8 microsieverts a day). The decision outraged many parents, who feared that the new standard meant that their children would be exposed to unhealthy levels of radiation. The Education Ministry initially responded by stating that there was non intention to allow 20 millisieverts of exposure, and that it actually expected that exposure to children would not exceed 10 millisieverts of radiation a year."

In past posts here, I have mentioned this problem and the need for more realistic standards for radiation. Despite what anti-nuclear groups say, there is solid scientific evidence that low dosages of ionizing radiation are not harmful. I have even given reasonable standards based on areas of the world with much higher background radiation and limits that are applicable to workers in nuclear technologies. Thanks to science fiction, uneducated anti-nuclear activists and government agencies of questionable competence, there will continue to be needless confusion on what is allowable without detectable increase in health risk.

"Although radiation may cause cancers at high doses and high dose rates, currently there are no data to establish unequivocally the occurrence of cancer following exposure to low doses and dose rates – below about 10,000 mrem (100 mSv). Those people living in areas having high levels of background radiation – above 1,000 mrem (10 mSv) per year – such as Denver, Colorado, have shown no adverse biological effects." The above is taken from the US Nuclear Regulatory Commission radiation fact sheet. Ten millisieverts per year is about 27 microsieverts per day, or 1.14 microsieverts per hour.

In the same fact sheet, the average US background radiation is 310 millirem per year, or 3.1 millisieverts per year, which is about 8.5 microsieverts per day or 0.35 microsieverts per hour. For school children in Fukushima, the goal is now 1 millisievert per year, about 2.7 microsieverts per day or 0.11 microsieverts per hour.
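Converting annual doses into daily and hourly rates is easy to get wrong by a factor of ten, so here is a small sketch of the conversion. It assumes a 365-day year and a constant dose rate, which real exposures never quite are.

```python
from typing import Tuple

DAYS_PER_YEAR = 365
HOURS_PER_YEAR = DAYS_PER_YEAR * 24


def annual_msv_to_usv_rates(msv_per_year: float) -> Tuple[float, float]:
    """Convert an annual dose in mSv to average daily and hourly doses in microsieverts."""
    usv_per_year = msv_per_year * 1000.0
    return usv_per_year / DAYS_PER_YEAR, usv_per_year / HOURS_PER_YEAR


for label, annual in [("Fukushima school goal", 1.0),
                      ("US average background", 3.1),
                      ("Denver-type high background", 10.0),
                      ("Japanese upper limit", 20.0)]:
    per_day, per_hour = annual_msv_to_usv_rates(annual)
    print(f"{label}: {annual} mSv/yr = {per_day:.1f} uSv/day = {per_hour:.2f} uSv/h")
```

By the same arithmetic, a 20 mSv annual dose averages about 55 microsieverts per day (about 2.3 per hour), so the 3.8 microsievert figure in the Japan Probe quote is presumably an hourly rate rather than a daily one.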

Explaining the real impact of radiation to parents after a nuclear accident is not something I envy. But if the Japanese can reduce radiation exposure in Fukushima Prefecture to one tenth the background of Denver, Colorado, and less than one third the normal background radiation in the United States, more power to them.

The Japan Times had the same story, and between the two sources there appears to be some confusion. By using 1 millisievert per year, the Japanese nuclear regulators appear to be sticking with the US NRC guideline of 1 millisievert per year above normal background. That is perfectly reasonable in most situations, but a bit overly conservative for Fukushima Prefecture. Their proposed target of 10 mSv per year is much more realistic and in line with the Denver, Colorado experience cited by the NRC.

For concerned parents, the 20 mSv per year upper limit may be high from a comfort standpoint, but there is no indication that it is unsafe. Adult nuclear energy workers have a 50 mSv per year limit with no ill effects, and less than half that limit should not pose a problem for children. The only real open question is infants, who have not been a large test group for obvious reasons.

Potatoes from Japan are in the news for low levels of radiation. The differences in radiation standards will continue to rear their ugly head. In the Thai article, sweet potatoes tested at nearly 16 becquerel per kilogram, which is well below the Thai limit of 100 Bq/kg. Japan has a limit of 500 Bq/kg for most produce. These arbitrary limits, varying between nations, should be realistically addressed.
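One way to see how much room the sweet potato sample leaves is to express it as a percentage of each limit. This sketch assumes both limits apply to radioactive cesium, as the 2011 food limits generally did, so naturally occurring potassium 40 is not counted against them.

```python
# Cesium limits in Bq/kg quoted in the text; other nations and food categories differ.
LIMITS_BQ_PER_KG = {
    "Thailand": 100.0,
    "Japan (most produce)": 500.0,
}


def percent_of_limits(sample_bq_per_kg: float) -> dict:
    """Express a measured sample as a percentage of each limit."""
    return {name: 100.0 * sample_bq_per_kg / limit for name, limit in LIMITS_BQ_PER_KG.items()}


sweet_potato = 16.0  # Bq/kg reported in the Thai article
for name, pct in percent_of_limits(sweet_potato).items():
    print(f"{name}: {pct:.1f}% of the limit")
```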

While bananas and Brazil nuts are fairly well known to be naturally radioactive, potatoes are also a background radiation contributor, with levels of 125 Bq/kg not uncommon. Hypersensitivity to radiation after an incident is common and can only be combated with education before an incident. Now Thailand is planning to destroy perfectly safe sweet potatoes once it determines a safe means to destroy a safe food. Yes, it is a little stupid, but every nation has to deal with its own lack of proper standards and public trust.

The new tuber terror has spurred the media to look up reports on the potential for potatoes to absorb radiation as they grow. None of the reports I have seen mention that adding potassium fertilizer to the soil decreases the amount of radioactive Cesium 137 the potatoes absorb, much like iodine tablets reduce the human body's absorption of radioactive iodine.

It would be nice if some trusted government agency, if there is one, published the average radiation levels of foods and recommended radiation limits so more countries could get on the same page.

Saturday, May 28, 2011

The Sky Has a Temperature!

While many on the web are discussing the boring legalities of scientific endeavor, I have been pondering the humorous contradictions facing certain political activists and how best to explain the greenhouse effect.

One of the better explanations is on Science of Doom, in twelve-plus parts. While the blogger does an excellent job, I have to admit I have dozed off a few times and found the parts I was awake for maybe a little complex for the average reader.

Many people still find it difficult to understand how a small trace of carbon dioxide, 390 parts per million, could change climate. It is a matter of scale, which I have pounded on from time to time. So since I really don't want to work on the motorhome on this beautiful weekend, explaining climate change sounds like a good diversion. The sky has a temperature. There, how was that? Maybe a little more detail, huh?

Well, I can expand a bit, but that is the main point. Considering only the night sky, the sky has a temperature and the reason it has a temperature is that the gases that make up the atmosphere have a temperature. For about 20 bucks you can buy a cheap non-contact thermometer and check it out for yourself. The non-contact thermometer is an infrared temperature sensor. It determines temperature based on infrared radiation intensity. Just point it at the sky, pull the trigger and you get a temperature reading. On a cloudy night it is easy to see that the clouds are warmer than the clear sky patches. Depending on how good the thermometer is and how clear the sky is you get pretty big temperature differences.
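The thermometer's trick can be sketched with the Stefan-Boltzmann law, which ties the infrared power a body radiates to the fourth power of its absolute temperature. This is a simplified model, not how any particular instrument works: it assumes whole-spectrum blackbody radiation, whereas real non-contact thermometers sense only a band of infrared (often around 8-14 µm) and apply a fixed emissivity setting. The 2.7 K "deep space" entry is the cosmic microwave background temperature, added for comparison.

```python
# Simplified infrared thermometer: convert between temperature and radiated
# flux with the Stefan-Boltzmann law, assuming a whole-spectrum blackbody.
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def flux_from_temp_c(temp_c: float, emissivity: float = 1.0) -> float:
    """Radiant flux (W/m^2) emitted by a body at temp_c degrees Celsius."""
    t_kelvin = temp_c + 273.15
    return emissivity * SIGMA * t_kelvin ** 4


def temp_c_from_flux(flux: float, emissivity: float = 1.0) -> float:
    """Temperature (°C) the thermometer would report for a measured flux."""
    t_kelvin = (flux / (emissivity * SIGMA)) ** 0.25
    return t_kelvin - 273.15


readings = (("clear sky", -40.0), ("clouds", -10.0), ("deep space", 2.7 - 273.15))
for name, temp_c in readings:
    print(f"{name:10s} {temp_c:8.2f} °C -> {flux_from_temp_c(temp_c):10.3g} W/m^2")
```

Under these assumptions a -40 degree clear sky still radiates roughly 170 W/m² and -10 degree clouds roughly 270 W/m², while 2.7 K space radiates only a few millionths of a W/m², which is the "much greater than the empty space beyond it" point in numbers.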

Down here in the humid tropics, the clear sky may be -40 degrees and the clouds -10 degrees. It doesn't matter if the temperature is in C or F because the accuracy of the cheap non-contact thermometer is not all that great anyway. You will measure a difference though.

What throws most people off is that they can see clouds and they don't see anything in the clear sky. That clear sky is the important part, though: even at -40 degrees it has a temperature much higher than the empty space beyond it.

Carbon dioxide is a fairly well mixed gas. Water vapor is not so well mixed. So while water vapor may make up as much as 4% of the local atmosphere, in a clear, dry sky it makes up much less, say 0.5 percent. 390 parts per million, 0.000390, is a small number, but then so is 0.5%, or 0.005. At this water vapor concentration, CO2 amounts to 7.8% of the water vapor (about 7.2% of the two gases combined), neglecting the other, rarer greenhouse gases. As far as our matter of scale goes, 7.8% is significant. To simplify things, whenever CO2 has an impact of greater than 1% of the total greenhouse gases, it is significant.

Before the industrial era, CO2 was about 280 parts per million, so under these same conditions CO2 would have amounted to about 5.6% of the water vapor (about 5.3% of the two combined) in a 0.5 percent water vapor sky. As long as there are greenhouse gases in the atmosphere, the sky will have a higher temperature than it would if they were not there.
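The 7.8% and 5.6% figures are CO2 divided by water vapor; expressed as a share of the two gases combined, they come out slightly lower. A minimal sketch of both calculations, assuming the 0.5% water vapor figure from the text and, as the post does, ignoring the other greenhouse gases (function name is illustrative):

```python
from typing import Tuple

WATER_VAPOR_FRACTION = 0.005  # 0.5% water vapor in a clear, dry sky


def co2_share(co2_ppm: float, water_fraction: float = WATER_VAPOR_FRACTION) -> Tuple[float, float]:
    """Return (CO2 relative to water vapor, CO2 share of CO2 + water vapor).

    Ignores the other, rarer greenhouse gases.
    """
    co2_fraction = co2_ppm * 1e-6  # parts per million -> plain fraction
    ratio_to_water = co2_fraction / water_fraction
    share_of_combined = co2_fraction / (co2_fraction + water_fraction)
    return ratio_to_water, share_of_combined


for ppm in (280, 390):
    ratio, share = co2_share(ppm)
    print(f"{ppm} ppm: {ratio:.1%} of the water vapor, {share:.1%} of CO2 + water vapor")
```

Either way of counting it, CO2 stays well above the 1% threshold the post uses for "significant".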

If there were no greenhouse gases, the temperature of the sky would be about 33 degrees C lower. That is not a hard and fast number. It would vary depending on the initial temperature of the surface under that portion of the sky and the thermal mass of the surface. Water has a high thermal mass, so it takes longer to heat and cool, so it would be warmer if the sky is over the ocean than over a sandy desert for example. Then since the ocean is water, there would be water vapor which is a greenhouse gas, so the 33 degree number is more of a concept than a real situation.
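The 33 degree figure is usually arrived at by comparing Earth's average surface temperature, about 15 °C (288 K), with the temperature at which a planet with Earth's reflectivity would radiate away exactly the sunlight it absorbs. A rough sketch of that textbook estimate, assuming round values of about 1361 W/m² for incoming sunlight and an albedo (reflected fraction) of about 0.30:

```python
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
SOLAR_CONSTANT = 1361.0  # assumed: sunlight at Earth's distance, W/m^2
ALBEDO = 0.30            # assumed: fraction of sunlight reflected straight back
SURFACE_MEAN_K = 288.0   # assumed: global mean surface temperature, about 15 C


def effective_temperature_k(solar: float = SOLAR_CONSTANT, albedo: float = ALBEDO) -> float:
    """Temperature at which a planet radiates away exactly the sunlight it absorbs."""
    # Sunlight hits a disk but is radiated from a whole sphere: area ratio 1/4.
    absorbed = solar * (1.0 - albedo) / 4.0
    return (absorbed / SIGMA) ** 0.25


t_eff = effective_temperature_k()
print(f"effective temperature: {t_eff:.1f} K ({t_eff - 273.15:.1f} C)")
print(f"difference from surface mean: {SURFACE_MEAN_K - t_eff:.1f} K")
```

This lands near 255 K, about 33 degrees below the observed surface average. As the post says, it is a concept rather than a real situation: it ignores the thermal mass of land and ocean, and a real water-covered planet could never be free of water vapor.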

Understanding why the 33 degree number is a concept is kind of important. As long as there is water on the planet, there will be water vapor. Water vapor is the dominant greenhouse gas because of the amount of water on the planet, so CO2 plays a limited role in limited regions of the atmosphere. The drier the air, the greater its role.

The physics behind the greenhouse effect is really not that complicated. Greenhouse gas molecules are built differently from the diatomic molecules that make up most of the atmosphere: N2 (nitrogen) and O2 (oxygen) make up nearly 99 percent of it, while all the trace greenhouse gases have more than two atoms per molecule. Molecules with three or more atoms can bend and stretch in ways that shift their electric charge unevenly, so they behave differently when excited: they absorb and emit infrared heat radiation readily, while the symmetric diatomic molecules do so hardly at all. Once excited by infrared or by collisions with other molecules, they gain heat energy, which they can pass along to any other molecule or lose to space. The closer they are to the surface when excited, the more heat the surface can retain. If the greenhouse gas molecules are high in the atmosphere, heat is still retained, but it has less impact on the surface.

Some will have a problem with my word "retained". I like it because retained does not have a fixed time associated with it. You can retain your earnings for a long time or spend them quickly, but they were still retained because you had the option to use them. Once you understand that greenhouse gases help retain heat, you can look at the significance of scale of the individual greenhouse gases to understand the relative impact they have.

It is pretty easy to understand that the higher the sky's temperature, the more heat is retained and the warmer the surface can be. If you set a cold beer on a picnic table in the winter, it will stay cold longer than it would in the summer. If you are not into beer, you could use a cup of hot coffee, which would stay hot longer in the summer than in the winter. The rate of heat flow depends on the temperature difference. If the sky is colder, the surface gets colder faster than when the sky is warmer. Heat flows from hot to cold, and flows faster the bigger the difference between the hot and the cold.
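The same idea can be put in numbers for radiation between the ground and the sky. A simplified sketch, assuming both behave as blackbodies and ignoring convection, conduction and evaporation; the 25 °C night-time ground temperature is a hypothetical tropical value, and the two sky temperatures are the clear-sky and cloud readings mentioned earlier:

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def net_radiative_loss(surface_c: float, sky_c: float, emissivity: float = 1.0) -> float:
    """Net infrared heat loss (W/m^2) from a surface to the sky above it.

    The surface radiates according to its own temperature and receives
    radiation according to the sky's; the difference is what it loses.
    A positive result means the surface is cooling.
    """
    t_surface = surface_c + 273.15
    t_sky = sky_c + 273.15
    return emissivity * SIGMA * (t_surface ** 4 - t_sky ** 4)


SURFACE_C = 25.0  # hypothetical night-time ground temperature in the tropics
for sky_c in (-40.0, -10.0, 25.0):
    loss = net_radiative_loss(SURFACE_C, sky_c)
    print(f"sky at {sky_c:6.1f} C: surface loses {loss:6.1f} W/m^2")
```

Under the -40 degree clear sky the ground loses roughly 280 W/m², under the -10 degree clouds roughly 175 W/m², and with the sky at the same temperature as the ground the net loss is zero: the bigger the difference, the faster the heat flows.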

The greenhouse effect is not rocket science; it should be easier for most to understand if people explaining it would stick to the basics and matters of scale.

Explaining how much an increase from 280 parts per million to 390 or 560 parts per million will increase the sky's temperature is a touch more complicated.

Friday, May 27, 2011

Fonda versus Nader - Clash of the Liberal Icons

What makes people tick interests me. Liberals in the United States are big-hearted, good-natured and totally gullible. Penn and Teller had fun with the environmentalists years ago with the Di-Hydrogen Monoxide skit.

Recently, Ralph Nader published a list of the top 12 cancer-causing products in your home. Crystalline silica, sand, is one of the prominent carcinogens included in the dangerous products. This is leading to a confusing situation for loyal liberals. Liberal icon Jane Fonda is a commercial spokesperson for L'Oreal cosmetics. One of the many carcinogenic ingredients in L'Oreal products is silica. What's a liberal to do?

"Lanolin Oil , Sesame Seed Oil , Oleyl Erucate , Microcrystalline Wax , PPG-5 Lanolin Wax , Acetylated Lanolin , Beeswax , Disteardimonium Hectorite , Fragrance , Tocopheryl Acetate , Rosa Canina Fruit Oil , Arginine PCA , Jojoba Seed Oil , Benzyl Alcohol , Silica , BHT , BHA , Calcium Sodium Borosilicate , Calcium Aluminum Borosilicate , Synthetic Fluorphlogopite , Polyethylene Terephthalate , Polymethyl Methacrylate , Iron Oxides CI 77492-CI 77499-CI 77491 , Mica , Titanium Dioxide CI 77891 , Red 7 CI 15850 , Red 28 Lake CI 45410 , Yellow 6 Lake CI 15985 , Carmine CI 75470 , Red 22 Lake CI 45380 , Yellow 5 Lake CI 19140 , Blue 1 Lake CI 42090 "

This list of ingredients for Hip lipstick is fortunately not easily accessible to most liberal female consumers. If it were, the sassy, sexy liberal spokesmodel would be a thing of the past.

Thursday, May 26, 2011

The Price of Fame - The New Legalities of Science

Scientists publish papers all the time; that's their job: publish or perish. Groundbreaking papers published in big-name journals rarely are really groundbreaking. Most have some flaw that is revealed under more scrutiny, which may lead to something or may not. If the work appears to be truly groundbreaking, the scientist or team receives notoriety. If the paper is perceived to be groundbreaking, touted as a new revelation, and then found to be flawed, the fun begins.

The price, especially when the scientist or his work may sway policy, is lawsuits. There are a lot of lawsuits flying around in climate change right now. Many of the scientists involved are not accustomed to the reality of fame, so their learning is entertaining and often very funny.

Michael Mann, of IPCC "Hockey Stick" fame, is currently center stage. He now works at Penn State University. Most sports fans know the old Penn State/State Pen joke. Mann has a pending lawsuit against a Canadian over his character being defamed because of that joke. Mann also worked at the University of Virginia, which is now being taken to court to release internal documents and e-mails related to Mann that were requested under the Freedom of Information Act.

A report critical of Mann's methods was withdrawn due to plagiarism. This report was authored by a student of Dr. Wegman, who testified before the US Congress that the Mann "Hockey Stick" was flawed and that the peer review process was tainted by the limited number of experts in the field available as reviewers. "Pal Review," as it has come to be known, is letting less than stellar work slip through to the big-name publications. That is the allegation, anyway.

So now the internet is really buzzing, with various blogs taking up positions. I have written my own opinion on the poor statistical choices of Dr. Mann. To err is human, so I am not one to kick a Mann when he's down, but Mann is a serial offender. His confidence in his novel statistical insight is surprisingly intact despite many reminders that his conclusions are not very skillful. It is Mann's poor choices that have led to calls for all climate science publications to be reviewed by third-party statisticians.

Oddly, a great many climate scientists publicly defend Mann's less than stellar work. This is opening a huge can of worms.

Everyone knows that public officials have less protection from defamation laws in most countries, especially the United States. Public figures have more legal protection until they attempt to influence policy, which puts them legally on par with public officials. So the whole defamation game changes.

One of the liberal bloggers, Deep Climate, pressed hard to discredit the paper on Michael Mann's "Pal Review" process. The plagiarism issue championed by the liberal climate science community now opens the door wider to scrutiny of their own ethics. Tit for tat. One example of obvious plagiarism by the liberal side is the Menne et al paper that stole the work in progress of conservative blogger Anthony Watts. Watts and Menne came to an agreement so that the stolen work was properly attributed after the fact. While Watts may be satisfied, plagiarism by employees of the United States Government of the work of a private-turned-public citizen can lead to the mother of all federal cases. Menne et al committed a crime. Not a devastating crime, one that would not even require a written reprimand. However, a potential conspiracy to commit even a minor crime could be a much greater crime. "Pal Review" can easily become conspiracy, which can be an extremely nasty legal situation.

Michael Mann's poor statistical choices, since they were repeated in his following works and in a paper co-authored with Dr. Eric Steig, could be considered a conspiracy to improperly influence public policy. By gaining the level of fame they have and attempting to sway policy, they no longer have private lives.

So I predict that the scat has not finished hitting the fan. No matter their intentions, quite a few climate scientists are about to enter the litigation zone.

Wednesday, May 25, 2011

Cooling Pipe Breach Possibly Caused by Earthquake - Fukushima

The Japan Times has an article, "Cooling Pipe Breach Now Laid on Tremblor," reporting that TEPCO now says the earthquake caused the cooling pipe breach. I doubted that the meltdown caused a penetration in the reactor pressure vessels; this makes more sense, but could still use a little more detail.

The Japan Times is an English-language newspaper/website with news from Japan. I don't speak Japanese, so I have to rely on such sources, but I cannot be sure of their accuracy.

Since the reactor was not quite designed for an earthquake of this magnitude, it is possible that the seismic force damaged the cooling pipes. Piping design for earthquake conditions is pretty good, so I still have some doubts. While the article mentions that overheating aggravated the situation and enlarged the leak, the timing leads me to believe that thermal shock, the overheating followed by inadequate cooling, may have been the major cause. Of course I cannot know for sure, and there are plenty of experts out there, but things are starting to make more sense.

The leak may complicate TEPCO's plans for cold shutdown, but since they already knew there was a leak, I doubt it will overly complicate things. It will make it more difficult to bring the other undamaged reactors back online, but that is not a practical idea under the circumstances anyway.

If the damage was indeed due to the earthquake, then the status of the other Japanese reactors does not look good. Since it may take years to determine exactly what happened, it is unlikely that the older reactors in Japan will be operated any time soon due to concerns of another megaquake. That is one of the odd things about human logic. Megaquakes relieve a great deal of pressure reducing the odds of a new earthquake of that magnitude in the same general area for many decades. Shutting down the other reactors for fear of another major earthquake now is like closing the door after the horse has run off then burning down the barn because it let the horse out.

Monday, May 23, 2011

Irradiated Food - What's the Fuss?

Following the situation in Japan, I have run across a few cultural curiosities. Japanese culture is steeped in tradition, but it ain't my tradition, so I find some things odd as all hell. I love Japanese food, but some things I do not even think of trying. Natto is one. It is fermented soybeans. It smells like toe jam. I love blue cheese. It doesn't smell much better to the average Japanese. So I guess that is a draw.

Raw beef? Hey, I happen to like some beef raw, but I make it myself just to make sure I don't get sick. The Japanese are pretty partial to raw beef also. A few people died in Japan recently due to bad raw beef in a restaurant. It is a price too high to pay for good eats.

Today is much different than when I was a kid. We had real neighborhood butchers and locally raised meats and vegetables. You hardly ever heard of someone getting food poisoning. Today with everything mass processed and brought in from who knows where, all too often something is in the news about food contamination. It is a problem that doesn't need to be a problem.

Irradiated foods, foods treated with ionizing radiation, have very little chance of causing most common food poisonings. The radiation treatment prolongs shelf life, keeps natural food coloring and, best of all, allows you to safely have hamburgers medium rare without having to grind the beef yourself. Only one problem: I can't get it, because people would rather die than eat something they think may be bad for them.

There are a lot of very smart-sounding people who claim irradiated foods are a cop-out, that they have a better way: grass fed, free range, organic... Other than growing it yourself, as I do (I don't trust a lot of people who think they know how to farm), there is not much you can do, other than irradiation.

Organic is great. I steal stuff from my neighbor's organic garden all the time. Personally, I know that, used properly, there are excellent fertilizers and even pesticides (gasp) that help grow perfectly healthy foods. The rub is that only certain things grow at certain times of the year. Also, I found out that my neighbors were not all that thrilled with me free-ranging my chickens. I even had brown eggs beat: my hens laid pastel green eggs. The fresh eggs were great, though, and chickens will keep a yard nearly bug free. The little piles of yet-to-be-composted chicken matter were a little problematic.

My good ol' days have passed. Now I have to deal with someone else's good ol' days, which unfortunately include Salmonella, E. coli and other weird nasty junk yet to be named. Why not cut me a little slack and at least read about irradiated foods from someone who actually knows about irradiated foods? If you are capable of being open minded, you might find the objections humorous.

Radiation Safety Levels - The Moving Target

I have been curious about nuclear power and its potential problems for a long time. In the early days of the atom, it was touted as the new miracle energy that would put everyone on easy street. As with most things so new and wonderful, I have grown to have my doubts, not only about the wonderful promises of life changing improvements, but the doom of life changing disasters.

"If a little is good, a lot has to be great" seems to be the cause of most of mankind's problems. Things that are used as directed tend to be fine in most cases; it is when we use too much of a good thing that we get in trouble. That seems to be the main problem with nuclear energy.

Nuclear is scary and expensive. Because of that, governments and utilities try to cram too much into small spaces as a compromise between the fear and cost factors. Then, if something goes wrong, any amount is too much. Maybe people will never learn to read the directions before use.

Since the situation in Fukushima started, I have spent more time than I should trying to put things in perspective. The nuclear problem is mainly due to trying to get too much out of the Fukushima power plant. When the plants were built, they produced huge amounts of energy for their day, though not very efficiently. Fuel was fairly inexpensive when Fukushima was first built; the safety requirements were the main cost. The game plan to build bigger may have sounded great, but it was not all that bright. Trying to keep the plants producing as long as possible was not too bright either. This is not just a Japanese thing.

Too big is the main problem. While the technology of nuclear power is not difficult to understand in today's world, we still seem to be befuddled by it. More nuclear fuel takes longer to shut down than less. There are pretty simple calculations you can make to see how much trouble you are willing to deal with in controlling nuclear power. In the old-style reactors, the gross (thermal) energy is about three times the net (electrical) energy produced. The decay heat at emergency shutdown is about 7% of the gross and drops rapidly to about 1.5% of the gross within roughly the first hour, then keeps falling more slowly for days. To safely shut down a reactor, you have to be able to deal with the 7% quickly and with the 1.5% as it decreases over a long time. If you can't deal with it, the losses are huge: you have trashed an expensive investment, lost a large portion of your electrical base load, scared the hell out of millions of people and have to pay damages to all the people who have had to change their daily lives to accommodate your screw-up. To reduce the impact once the mega plant has been built, you have to use whatever the design and situation allow to control the damage.
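To make those "simple calculations" concrete, here is a minimal Python sketch that uses only the rule-of-thumb ratios in the paragraph above: gross about three times net, 7% of gross right at shutdown, 1.5% of gross later on. The 500 and 1,000 megawatt unit sizes are hypothetical examples, not data for any particular plant, and the function name is made up for illustration.

```python
# Rule-of-thumb decay-heat arithmetic using only the ratios quoted in the post.
# These are rough figures for older reactors, not design data.

GROSS_TO_NET = 3.0        # gross (thermal) output is about 3x net (electrical) output
SHUTDOWN_FRACTION = 0.07  # decay heat right after an emergency shutdown, fraction of gross
LATER_FRACTION = 0.015    # decay heat after the initial rapid drop, fraction of gross


def decay_heat_mw(net_electric_mw: float) -> tuple[float, float, float]:
    """Return (gross thermal MW, decay heat at shutdown MW, later decay heat MW)."""
    gross = net_electric_mw * GROSS_TO_NET
    return gross, gross * SHUTDOWN_FRACTION, gross * LATER_FRACTION


if __name__ == "__main__":
    # Two hypothetical unit sizes, to show how the heat load scales with size.
    for net in (500.0, 1000.0):
        gross, at_shutdown, later = decay_heat_mw(net)
        print(f"{net:.0f} MWe unit: {gross:.0f} MWt gross, "
              f"{at_shutdown:.0f} MW at shutdown, {later:.1f} MW later")
```

A 1,000 MWe unit works out to about 3,000 MW thermal, 210 MW of heat to remove at shutdown and 45 MW later on. Half the unit size means half the heat, which is the case for smaller reactors made in the text.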

The Japanese dealt with Fukushima as best they could under the conditions. Most people have great hindsight, so it is easy for folks sitting at home to point out all the things that could have been done better. Many say the best solution would have been not to get into that situation to begin with. I am not particularly better than most at armchair quarterbacking, but there are a few things I think I have learned from Fukushima.

The first thing I notice is that the older the plant, the more you have to plan for things going wrong. Planning for each and every thing that could happen is close to impossible, but you can plan for the absolute worst case: the plant never shuts down, and there is no water, no load, nothing to stop things from going bad.

In a worst case, the worst thing is having to evacuate a sizable population. The area to be evacuated depends on the potential amount and spread of radiation. No matter how well the nuclear plant is designed, the potential fallout has to be considered. Twenty to thirty kilometers is normal for a nuclear power plant of Fukushima's size. That area appears to be based on the size of the individual reactors, not the total number of reactors. It takes energy to spread radiation, and it is the energy of an individual reactor that would determine the average potential radiation spread. Three reactors may release more radiation than one, but the distance it spreads would be close to that of the single largest reactor. Since human lives and livelihoods are the most pressing safety concern, that evacuation area should be minimized.

Time and money can solve almost any problem related to a nuclear power plant accident, except for the human element. Smaller individual reactors, even with many more installed on a site, reduce the potential impact on lives.

Better understanding of the impact of radiation fallout can also reduce the evacuation area. I have noticed that I am part of a larger group than I was ten years ago. Advances in nuclear medicine have educated many more people about the truth of radiation. While it is still dangerous, the human body can tolerate a lot more than previously thought. We have even learned over the past 30 years that every day we are exposed to more natural radiation than we would ever have imagined. While many radioactive isotopes are no longer common in nature, similar isotopes are, and we are continuously exposed to them in varying amounts.

Most of my curiosity about radiation started years ago with the "discovery" of radon levels in homes. I used to test indoor air quality, and I avoided jumping on the radon testing bandwagon. The health impact of low levels of radon was nothing compared to that of common molds. Water in homes where water is not supposed to be is the primary cause of indoor air pollution, followed by outgassing of volatile organic compounds. Knowing this, I have little problem living near a nuclear power plant, but would be adamantly opposed to living near a chemical plant. I would have days or weeks to evacuate from a nuclear incident, but could be dead before the alarms sounded if I lived in Bhopal, India, for example. Long-term exposure to low levels of toxic chemicals can have a much more devastating impact than low levels of radiation.

Safe use of nuclear energy demands knowing the potential for harmful levels of fallout in a worst case and understanding which levels are truly harmful. The first is known and can be reduced. The second is the bugger. Japan made a mistake by not establishing more realistic standards for harmful radiation levels. It had to revise maximum levels from its unrealistic standards to more realistic ones while a nuclear situation was in progress. That creates mistrust, and the lost trust may never be regained.

The US Environmental Protection Agency is on the same poor path. It is imposing irresponsible maximum standards that are fractions of the guidelines of the US Nuclear Regulatory Commission, which are themselves very conservative. For example, the EPA limit for the Yucca Mountain nuclear waste storage facility is 15 millirem per year above background. 15 millirem per year is 1.7 microrem per hour, equivalent to 17 nanosieverts per hour or 0.017 microsieverts per hour. One hundred times that, 1.7 microsieverts per hour or about 14.9 millisieverts per year, is roughly 30% of the 50 millisievert (5 rem) US annual occupational limit that applies to radiation workers such as people employed in nuclear medicine. With the EPA standard, people living in the area are exposed to more radiation if they have two smoke detectors in their homes (37 kilobecquerels per detector). Think about that: 37,000 becquerels per smoke detector. Of course, becquerels do not convert directly to sieverts; the dose depends on the isotope and on how you are exposed, for example by ingestion. Still, it is an illuminating comparison.
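The chain of unit conversions in that paragraph is easy to check with a short Python sketch. The factors are standard (1 rem = 0.01 sievert, 8,760 hours in a non-leap year); the function names are made up for illustration. Note that the factor linking 0.017 to 1.7 microsieverts per hour is one hundred, and that carrying full precision instead of rounding to 1.7 first gives 15.0 rather than 14.9 millisieverts per year.

```python
# Unit conversions for the Yucca Mountain example. Conversion factors are
# standard: 1 rem = 0.01 Sv, so 1 microrem = 10 nanosieverts.

HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap years ignored


def mrem_per_year_to_usv_per_hour(mrem_per_year: float) -> float:
    """Convert an annual dose in millirem to an average rate in microsieverts per hour."""
    microrem_per_hour = mrem_per_year * 1000.0 / HOURS_PER_YEAR
    nanosievert_per_hour = microrem_per_hour * 10.0
    return nanosievert_per_hour / 1000.0


def usv_per_hour_to_msv_per_year(usv_per_hour: float) -> float:
    """Convert an average rate in microsieverts per hour to millisieverts per year."""
    return usv_per_hour * HOURS_PER_YEAR / 1000.0


if __name__ == "__main__":
    epa_rate = mrem_per_year_to_usv_per_hour(15.0)
    print(f"15 mrem/yr = {epa_rate:.4f} uSv/h")
    hundredfold = 100 * epa_rate
    annual = usv_per_hour_to_msv_per_year(hundredfold)
    print(f"100x = {hundredfold:.2f} uSv/h = {annual:.1f} mSv/yr")
    # Compare with the 50 mSv (5 rem) US annual occupational limit.
    print(f"Fraction of 50 mSv/yr: {annual / 50.0:.0%}")
```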

Radiation is a part of our lives; isn't it time we started trying to understand it better?

Saturday, May 21, 2011

Japan's Radioactive Tea

A type of tea in Japan has been found to be contaminated with Cesium 137. The Japanese government's limit for radiation in the tea is 500 becquerels per kilogram. Since tea is important in Japanese culture, some citizens are wondering whether the limit is really needed. The Japan Probe post on Radioactive Tea in Japan gives a good account of the situation. One of the main issues is that tea is dried, steeped and then drunk, but the Japanese regulation applies to undried tea, as though it were a vegetable to be eaten.

The unit becquerel means one disintegration per second. In the USA we use the unit curie, where one curie equals 37 billion disintegrations per second. A disintegration is a pop; a Geiger counter registers some of those pops as counts. For the average tea drinker this is pretty scary, and most have no clue whether the tea is really bad for them, since they have no idea what a becquerel means health-wise.
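A few lines of Python show how far apart the two units are. This is only a sketch of the definitions (1 becquerel is one disintegration per second; 1 curie is exactly 3.7 × 10^10 becquerels, so 37 million per second is one millicurie); the function names are made up for illustration.

```python
# Activity unit conversions. By definition, 1 becquerel (Bq) is one
# disintegration per second and 1 curie (Ci) is 3.7e10 Bq.

BQ_PER_CURIE = 3.7e10


def curies_to_becquerels(curies: float) -> float:
    return curies * BQ_PER_CURIE


def becquerels_to_curies(becquerels: float) -> float:
    return becquerels / BQ_PER_CURIE


if __name__ == "__main__":
    print(f"1 Ci  = {curies_to_becquerels(1.0):,.0f} Bq")
    print(f"1 mCi = {curies_to_becquerels(1e-3):,.0f} Bq")
    print(f"1 uCi = {curies_to_becquerels(1e-6):,.0f} Bq")
    # The 500 Bq/kg tea limit expressed in the US unit (nanocuries).
    print(f"500 Bq = {becquerels_to_curies(500.0) * 1e9:.1f} nCi")
```

The microcurie line, 37,000 becquerels, is the same figure quoted earlier for a smoke detector.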

The Nuclear Regulatory Commission in the United States came up with the Banana Dose to help people understand radiation. The Banana Dose (BD) is considered to be 520 picocuries. Using a handy-dandy converter, a BD is about 19 becquerels, so 500 becquerels is a bunch of bananas: about 26 of them. Since most people don't eat fresh tea leaves, the tea is dried first, which decreases its weight and so increases the radiation per kilogram. But only a small amount of dried tea leaves is used at a time, and not all of the radiation may dissolve into the tea during steeping. Interesting puzzle.
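The banana conversion can be sketched the same way, assuming the post's figure of 520 picocuries per banana and the standard 1 picocurie = 0.037 becquerel. The function names are hypothetical. This compares activity (pops per second) only; different isotopes deliver different doses per becquerel, so it is a feel-for-the-numbers tool, not a dose calculation.

```python
# Express an activity in "banana doses", using the post's figure of
# 520 picocuries per banana. This compares activity (pops per second) only.

BQ_PER_PICOCURIE = 0.037   # 1 pCi = 0.037 Bq
BANANA_PCI = 520.0         # banana dose as quoted in the post


def banana_becquerels() -> float:
    """Activity of one banana dose, in becquerels."""
    return BANANA_PCI * BQ_PER_PICOCURIE


def in_bananas(becquerels: float) -> float:
    """How many banana doses of activity a given number of becquerels represents."""
    return becquerels / banana_becquerels()


if __name__ == "__main__":
    print(f"1 banana dose ~ {banana_becquerels():.2f} Bq")
    print(f"500 Bq ~ {in_bananas(500.0):.0f} bananas")
```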

After drying, tea weighs about 15% of what it did straight off the plant. So tea that tested at 500 becquerels per kilogram fresh would test at roughly 3,333 becquerels per kilogram dried. The average strong tea bag contains roughly 3.3 grams, per Wikipedia, so a mug of radioactive tea would contain about 11 becquerels. What I am calling a mug is about 1/4 liter, so one liter of radioactive tea would carry about 44 becquerels, if all of the radiation in the tea leaves dissolved in the water. For infants, the Japanese limit for radiation in water is 100 becquerels per liter, and for adults it is 300. So at first blush, the 500 becquerel limit for fresh tea leaves does not look all that reasonable. One thousand becquerels per kilogram of fresh tea would bring the consumed tea to 88 becquerels per liter, which is under the infant limit, so it should be safe even for infants if it were water, milk, juice or any other liquid.
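The tea arithmetic above chains several rough factors, so a small Python sketch makes it easy to rerun with different assumptions. The constants are the post's figures (15% dry weight, 3.3 grams per bag, one bag per 1/4 liter mug). The `extraction` parameter is a hypothetical addition: the fraction of the leaves' activity that actually ends up in the water, with 1.0 matching the post's worst case.

```python
# Rough activity of brewed tea, using the post's figures.
DRY_WEIGHT_FRACTION = 0.15  # dried tea weighs ~15% of fresh leaves
TEA_BAG_GRAMS = 3.3         # dried leaf in one strong tea bag
MUG_LITERS = 0.25           # one "mug" as defined in the post


def becquerels_per_liter(fresh_bq_per_kg: float, extraction: float = 1.0) -> float:
    """Activity per liter of brewed tea, assuming one tea bag per mug.

    extraction (hypothetical parameter) is the fraction of the leaf's activity
    that dissolves into the water; 1.0 assumes everything dissolves.
    """
    dried_bq_per_kg = fresh_bq_per_kg / DRY_WEIGHT_FRACTION
    bq_per_mug = dried_bq_per_kg * (TEA_BAG_GRAMS / 1000.0) * extraction
    return bq_per_mug / MUG_LITERS


if __name__ == "__main__":
    for limit in (500.0, 1000.0):
        print(f"{limit:.0f} Bq/kg fresh -> "
              f"{becquerels_per_liter(limit):.0f} Bq per liter of tea")
```

Lowering `extraction` below 1.0 only shrinks the result, so the post's all-dissolves assumption is the conservative end.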

I just used rough numbers, but it looks like the tea should be pretty safe. Since the 100 becquerel limit is very conservative to begin with, I am pretty sure tea is safe at 1,000 becquerels per kilogram of fresh leaves. I would think the Japanese government would test the tea as it is actually consumed before setting an odd limit on it, unless there is a common food use for fresh tea leaves. It would also be nice if officials used the banana dose equivalent more when describing the safety of foodstuffs.

It would also be interesting to know whether vegetables were tested after rinsing. Most radioactive isotopes wash into the soil. Some are taken up by growing plants, but the majority remains in the soil. Cesium is absorbed by the human body much like potassium. According to some of the sources I have read, most of the cesium ingested passes through the body in about 100 days. Since it is chemically similar to potassium and sodium, that makes sense, but more could be retained.
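The 100-day figure ties in with the biological half-life idea: what is left in the body depends on both radioactive decay and elimination, combined as an effective half-life, 1/T_eff = 1/T_phys + 1/T_bio. The sketch below assumes, for illustration, that the post's 100 days can be treated as a biological half-life (the post says "most" passes in that time, so this is a loose reading) and uses the roughly 30-year physical half-life of Cesium 137. The names are hypothetical.

```python
# Effective half-life: physical decay and biological elimination both remove
# cesium from the body, so their rates (1/half-life) add.

CS137_PHYSICAL_HALF_LIFE_DAYS = 30.08 * 365.25  # about 30 years
ASSUMED_BIOLOGICAL_HALF_LIFE_DAYS = 100.0        # assumption based on the post's ~100 days


def effective_half_life(physical: float, biological: float) -> float:
    """Combine two half-lives: 1/Te = 1/Tp + 1/Tb."""
    return 1.0 / (1.0 / physical + 1.0 / biological)


def fraction_remaining(days: float, half_life_days: float) -> float:
    """Fraction of the original amount left after `days`."""
    return 0.5 ** (days / half_life_days)


if __name__ == "__main__":
    te = effective_half_life(CS137_PHYSICAL_HALF_LIFE_DAYS, ASSUMED_BIOLOGICAL_HALF_LIFE_DAYS)
    print(f"Effective half-life: {te:.1f} days")
    for d in (100, 365):
        print(f"After {d} days: {fraction_remaining(d, te):.1%} remains")
```

The effective half-life comes out just under 100 days: on the timescale cesium stays in the body, its own decay barely matters, and elimination does almost all the work.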

While Fukushima is a dangerous mess, there is plenty to be learned from the misfortune of the Japanese people. Hopefully, a better understanding of ionizing radiation will be one of the lessons learned.

Thursday, May 19, 2011

Climate Predictions and Data Accuracy

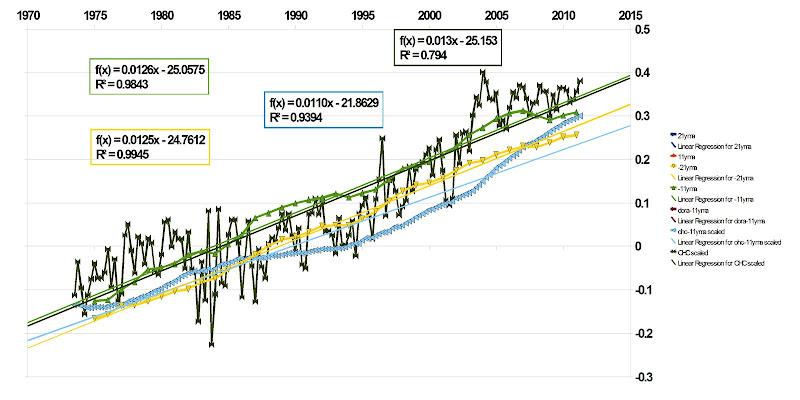

There is a lot of debate about how good the data used to predict global warming is. Fall et al. have a paper out about US surface stations, Tisdale and Tamino are battling over ocean heat content, and the models are constantly challenged by skeptics. All this just leads to more disagreement between the players and the numbers. When I see this much noise, both in the data and in the argument, my usual conclusion is that the answer is near the middle.

My simple (crude, if you prefer) look at things is just my way of getting a handle on them. It is based on economic bubbles.

We have had quite a few economic bubbles, and no one seems to see them coming until they arrive. I have used longer-term moving averages for a long time to get a handle on the economy. A five-year, ten-year or longer average gives you a simple measure. If what you are betting on is above the average, it is likely to correct to below the average. The further above, the more likely the correction will be severe. It works pretty well for economic trends. Climate is different because the data is not all that great.
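The moving-average check described above can be sketched in a few lines of Python. This is an illustration, not the author's actual method: the function names and the sample numbers are made up, and including the current year in its own average is a design choice (here it is included). The output only measures how far a value sits above its trend; it does not by itself predict a correction.

```python
from typing import Optional


def trailing_average(series: list[float], window: int) -> list[Optional[float]]:
    """Mean of the last `window` values, including the current one (None until enough data)."""
    averages: list[Optional[float]] = []
    for i in range(len(series)):
        if i + 1 < window:
            averages.append(None)
        else:
            averages.append(sum(series[i + 1 - window:i + 1]) / window)
    return averages


def percent_above_average(series: list[float], window: int) -> list[Optional[float]]:
    """How far each value sits above (+) or below (-) its trailing average, in percent."""
    result: list[Optional[float]] = []
    for value, avg in zip(series, trailing_average(series, window)):
        result.append(None if avg is None else 100.0 * (value - avg) / avg)
    return result


if __name__ == "__main__":
    # Made-up yearly index values, purely for illustration.
    index = [100.0, 102.0, 104.0, 110.0, 125.0, 150.0]
    for year, pct in enumerate(percent_above_average(index, window=5)):
        if pct is not None:
            print(f"year {year}: {pct:+.1f}% vs 5-year average")
```

With this made-up series, the last value sits about 27% above its five-year average; in the post's terms, the bigger that gap, the more severe a correction is likely to be.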